Named Entity Recognition (NER) is a text-processing task that extracts predefined named entities, such as person names, organizations, and locations, from unstructured text. It makes it possible to process large volumes of unstructured data quickly and draw insights from them. Several libraries and pretrained models are available, and they can be retrained or fine-tuned to meet specific requirements.

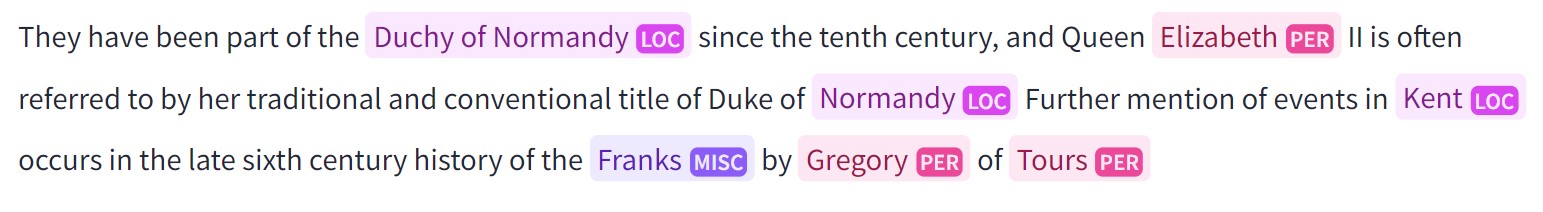

In this example, we use a fine-tuned BERT model from Hugging Face to extract entities from a given text. The model is trained to recognize four entity types: person, organization, location, and miscellaneous. For details on the training data, model performance, and other training details, see the model page:

https://huggingface.co/dslim/bert-base-NER
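The labels this model returns carry a prefix, as in `B-PER` in the output shown later in this post. Assuming the common BIO tagging convention (`B-` for the first token of an entity, `I-` for a continuation, `O` for a token outside any entity), a label can be split into its parts roughly as follows. The helper `split_tag` and the readable names in `ENTITY_NAMES` are illustrative and not part of the transformers library.

```python
# Readable names for the short type codes. This mapping is an assumption made
# for the sketch; the model itself only emits the short codes.
ENTITY_NAMES = {
    "PER": "person",
    "ORG": "organization",
    "LOC": "location",
    "MISC": "miscellaneous",
}


def split_tag(tag):
    """Split a BIO tag such as 'B-PER' into (position, readable entity name).

    'O' marks a token outside any entity and is returned as ('O', None).
    """
    if tag == "O":
        return ("O", None)
    position, _, code = tag.partition("-")
    # Fall back to the raw code if it is not in the illustrative mapping.
    return (position, ENTITY_NAMES.get(code, code))


print(split_tag("B-PER"))
print(split_tag("I-ORG"))
print(split_tag("O"))
```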

To get started, install the transformers package, along with any other required packages, using pip.

pip install transformers

Once all requirements are installed, we can load the pretrained model. If the model and tokenizer are already available locally, they are loaded from there; otherwise, they are downloaded from the internet.
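The "local first, otherwise download" behaviour can also be made explicit when a copy of the model is kept in a directory of your own. The sketch below is one possible way to do that, not how transformers resolves models internally: `resolve_model_source` is a hypothetical helper, and it assumes a saved model directory can be recognized by its `config.json` file.

```python
import os
import tempfile

HUB_ID = "dslim/bert-base-NER"


def resolve_model_source(local_dir, hub_id=HUB_ID):
    """Return local_dir if it looks like a saved model, otherwise the Hub id.

    Assumption: a directory holding a saved model contains a config.json
    file, so its presence is used here as a simple check.
    """
    if os.path.isfile(os.path.join(local_dir, "config.json")):
        return local_dir
    return hub_id


# A temporary directory stands in for a local model path.
with tempfile.TemporaryDirectory() as tmp:
    # No config.json yet, so the Hub id is returned.
    print(resolve_model_source(tmp))
    # Create an empty config.json to simulate a saved model.
    open(os.path.join(tmp, "config.json"), "w").close()
    # The local directory is now preferred.
    print(resolve_model_source(tmp) == tmp)
```

The returned value can be passed to `from_pretrained`, which accepts either a local directory or a Hub model id.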

from transformers import AutoTokenizer, AutoModelForTokenClassification

from transformers import pipeline

# load model and tokenizer

tokenizer = AutoTokenizer.from_pretrained("dslim/bert-base-NER")

model = AutoModelForTokenClassification.from_pretrained("dslim/bert-base-NER")

Once both the model and the tokenizer are loaded, we can create a pipeline that accepts a sentence and returns the recognized entities, then pass a sentence to it.

nlp = pipeline("ner", model=model, tokenizer=tokenizer)

# input example sentence

example = "My name is Wolfgang, I work in Microsoft and live in Berlin"

ner_results = nlp(example)

The pipeline returns a list of the recognized entities, each an object with the entity type, the matched word, a confidence score, and the entity's position in the sentence.

[

{'word': 'Wolfgang', 'score': 0.9990820288658142, 'entity': 'B-PER', 'index': 4, 'start': 11, 'end': 19},

{'word': 'Microsoft', 'score': 0.9986439347267151, 'entity': 'B-ORG', 'index': 9, 'start': 31, 'end': 40},

{'word': 'Berlin', 'score': 0.999616265296936, 'entity': 'B-LOC', 'index': 13, 'start': 53, 'end': 59}

]

This output can now be used to extract insights from any text dataset. For more details and updates on this model, see the model page linked above.
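As a minimal sketch of turning these results into something usable, the snippet below groups the recognized entities by type and drops low-confidence matches. It hard-codes the output shown above so that it runs without downloading the model. The function `group_entities` and the `min_score` threshold of 0.9 are illustrative choices, not part of the transformers API.

```python
from collections import defaultdict

example = "My name is Wolfgang, I work in Microsoft and live in Berlin"

# Output copied from the pipeline call shown above.
ner_results = [
    {'word': 'Wolfgang', 'score': 0.9990820288658142, 'entity': 'B-PER', 'index': 4, 'start': 11, 'end': 19},
    {'word': 'Microsoft', 'score': 0.9986439347267151, 'entity': 'B-ORG', 'index': 9, 'start': 31, 'end': 40},
    {'word': 'Berlin', 'score': 0.999616265296936, 'entity': 'B-LOC', 'index': 13, 'start': 53, 'end': 59},
]


def group_entities(results, text, min_score=0.9):
    """Group entity strings by type, skipping matches below min_score."""
    grouped = defaultdict(list)
    for item in results:
        if item["score"] < min_score:
            continue
        # 'B-PER' -> 'PER': drop the position prefix, keep the entity type.
        entity_type = item["entity"].split("-", 1)[-1]
        # Slice the original text using the character offsets.
        grouped[entity_type].append(text[item["start"]:item["end"]])
    return dict(grouped)


print(group_entities(ner_results, example))
# {'PER': ['Wolfgang'], 'ORG': ['Microsoft'], 'LOC': ['Berlin']}
```

This sketch treats each returned item as a complete entity, which holds for the example sentence. Entities that span several tokens would need their pieces merged first.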
